Part Three: How Does the Anatomy Framework Rate the Current Generative AI Wave?

This is Part Three of a three-part series on the Anatomy of an Innovation Wave. In Part One, I introduced the Anatomy Framework for assessing the intensity of a new technology. In Part Two, I developed five characteristics of technologies with greater disruptive potential. In this post, I assess the current generative AI wave against these five characteristics.

Criterion One: Innovation at All Layers

On the first criterion, generative AI has a fairly broad impact across all layers of the technology stack.

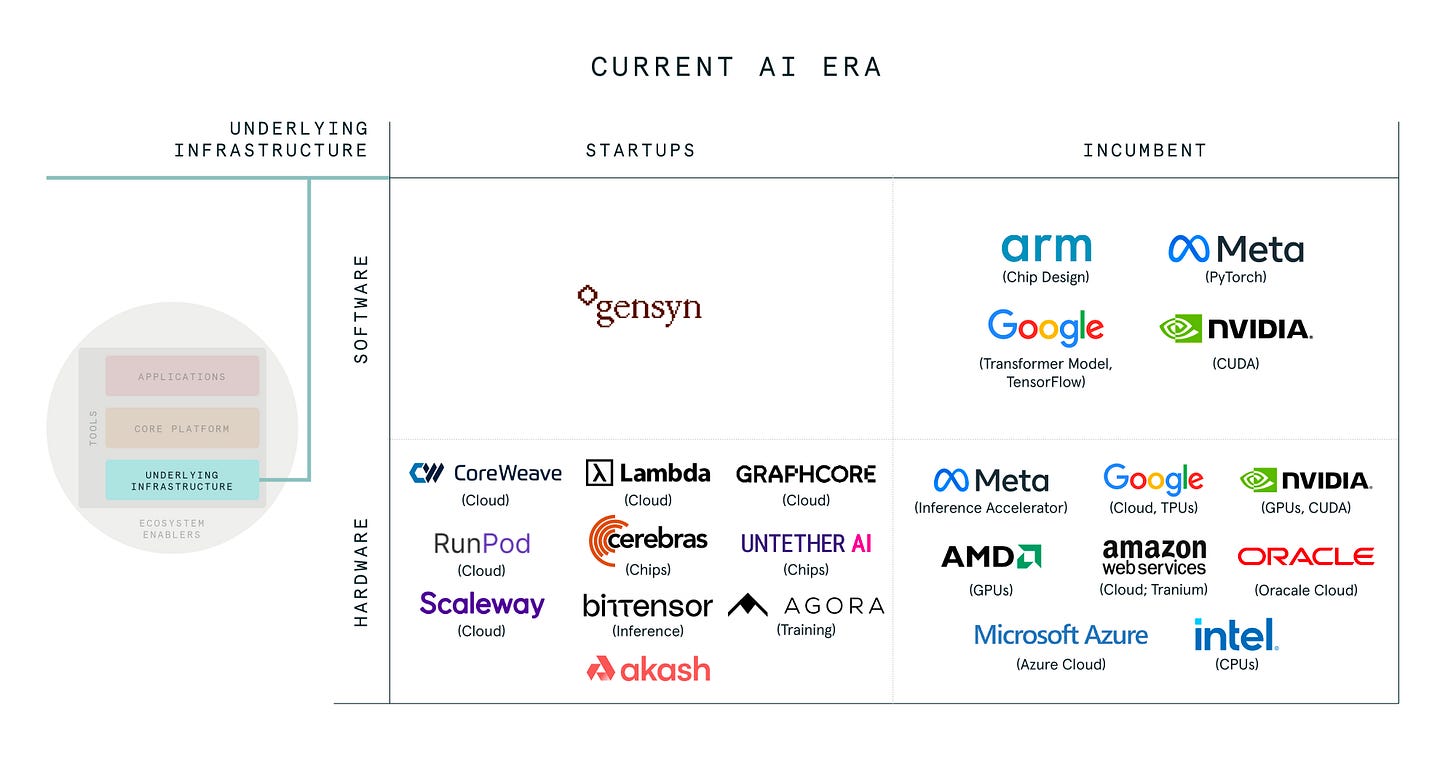

Underlying Infrastructure

The underlying infrastructure has several key components. One of the biggest constraints on training and deploying LLMs at scale is the availability of compute, namely GPUs. The compute supply chain includes GPU designers like NVIDIA, foundries like TSMC that manufacture the chips, and cloud service providers like Azure, GCP and AWS.

Underlying infrastructure is not exclusively hardware, however; software has been a key contributor to the recent acceleration of AI. The transformer, a deep learning architecture introduced by Google researchers in 2017, was a necessary breakthrough for the current generation of GPTs. CUDA is NVIDIA’s computing platform and API that enables developers to use GPUs for general-purpose computing. Other software at this layer needed to build the core platform includes deep learning frameworks such as PyTorch and TensorFlow. Together, the software and hardware in the underlying infrastructure layer provide the foundation for the new core platform.

Core Platform

Foundation models are the new core platform of the generative AI and LLM era. The landscape largely breaks down along two axes: models trained for general versus specific tasks, and proprietary versus open source. For example, proprietary general-purpose models include OpenAI’s GPT-4, Google’s PaLM 2 and the recently announced Gemini, and Anthropic’s Claude. Examples of proprietary models for specific tasks include Character.AI (chat), Inflection (personal assistants), and Med-PaLM 2 (medical).

On the open source side, Meta’s Llama 2 is a general-purpose model, with task-specific variants such as Llama 2-Chat and Code Llama. Similarly, MosaicML offers the general-purpose open source model MPT-30B along with fine-tuned versions, MPT-30B-Instruct (instruction following) and MPT-30B-Chat (dialogue). Other open source models worth mentioning include Falcon, Mistral 7B, and even Jais, an Arabic-language 13B-parameter open source model from Cerebras and G42.

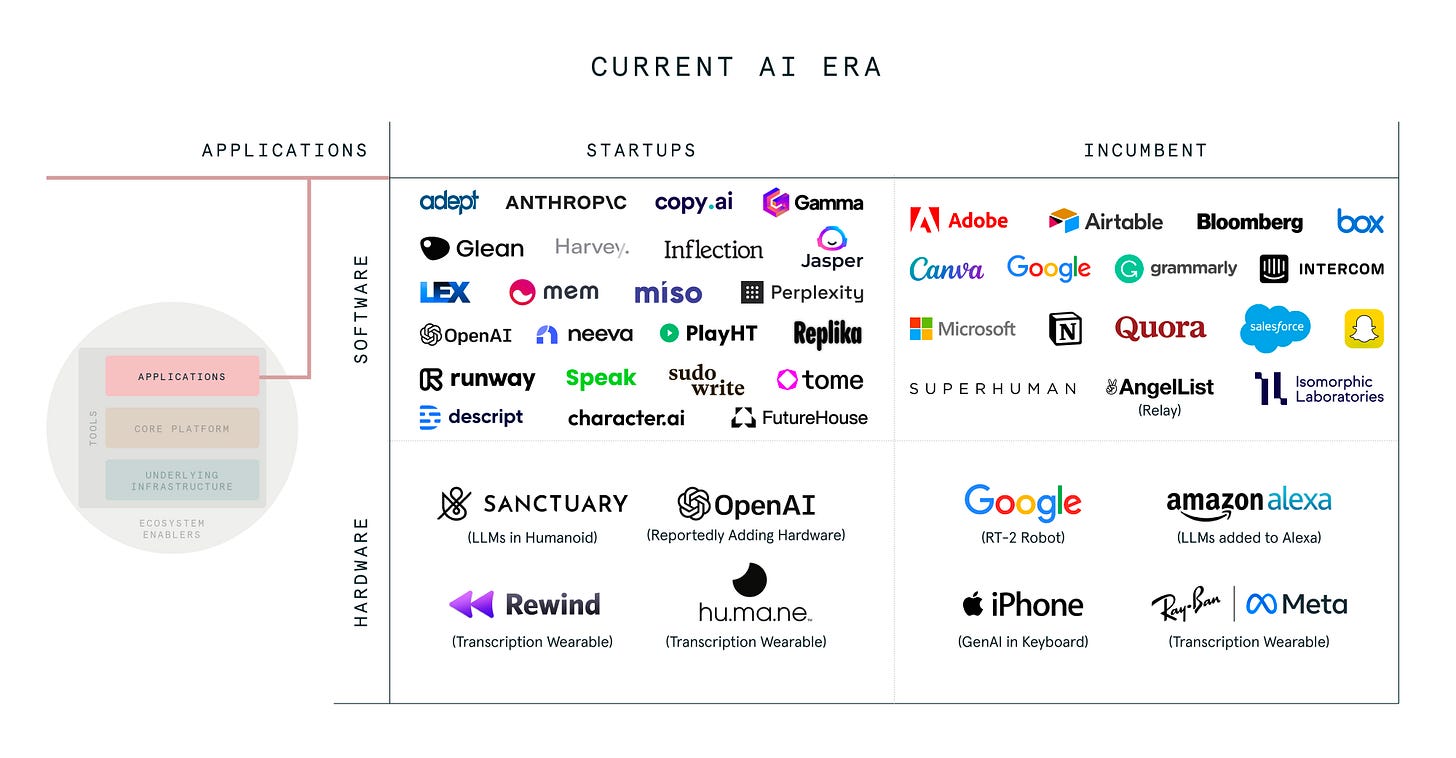

Applications

While developers access core platforms through APIs, most consumers first experienced generative AI through an application: chatbots like ChatGPT, image generators like Stable Diffusion, Midjourney and DALL-E, and creative tools like Tome and Sudowrite. At this layer, as the landscape below shows, both startups and incumbents are incorporating the new generative AI platform into their applications.

Tools

AI tools are evolving incredibly quickly, in part because AI applications are themselves new and developers are still working out best practices. Broad categories of AI tooling currently include: (1) vector databases, such as Pinecone and Qdrant, that provide efficient storage and retrieval of multidimensional vectors; (2) frameworks like LangChain for developing LLM-powered applications; (3) data frameworks like LlamaIndex that enable LLMs to access private or domain-specific data from PDFs, APIs and SQL databases; and (4) deployment tools such as Replicate, which provides infrastructure for running models via APIs, and BentoML, which takes models from training to deployment.
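To make the vector database category more concrete, here is a minimal sketch of the core operation these systems optimise: given a query vector, return the stored vectors most similar to it. It assumes toy 3-dimensional "embeddings" and hypothetical document IDs, and it uses a brute-force cosine-similarity scan in plain Python. It does not reflect the client API of Pinecone, Qdrant or any other product, which rely on specialised approximate nearest-neighbour indexes instead of scanning everything.

```python
import math
from typing import Dict, List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # define similarity with a zero vector as 0
    return dot / (norm_a * norm_b)


def top_k(query: List[float], store: Dict[str, List[float]], k: int = 2) -> List[Tuple[str, float]]:
    """Brute-force nearest-neighbour search: score every stored vector, keep the best k."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in store.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical toy embeddings; real ones come from an embedding model
# and typically have hundreds or thousands of dimensions.
store = {
    "doc-gpu-supply": [0.9, 0.1, 0.0],
    "doc-chip-packaging": [0.8, 0.3, 0.1],
    "doc-image-apps": [0.0, 0.2, 0.9],
}

query = [1.0, 0.2, 0.0]
for doc_id, score in top_k(query, store, k=2):
    print(f"{doc_id}: {score:.3f}")
```

Here the two infrastructure-themed documents score highest for the query, and the image-app document is left out. Because this scan touches every stored vector, its cost grows linearly with the collection. Avoiding that cost at scale is the job dedicated vector databases are built for.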

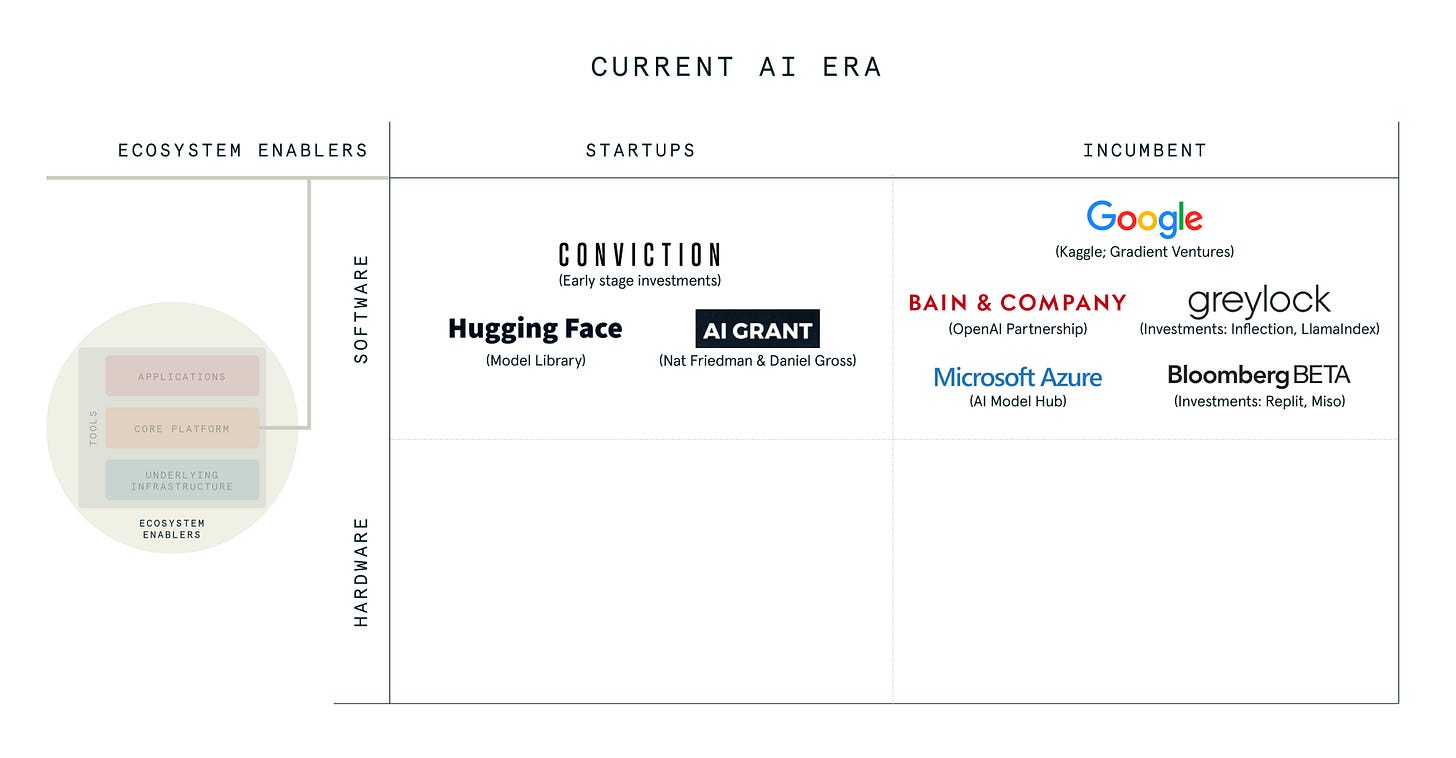

Ecosystem Enablers

Finally, ecosystem enablers play a crucial role in supporting the growth and adoption of AI technologies. These enablers include marketplaces like Hugging Face, consultants like PwC, and alliances such as the Bain and OpenAI partnership and the AI Alliance. Venture capital firms, both longstanding firms like 500 Global and new AI-specific firms like Conviction or AI Grant, also belong in this category for providing capital and other support to innovators. Together, these enablers supply the infrastructure, funding, and strategic guidance that facilitate innovation and adoption in this rapidly evolving field.

Criterion Two: Is There an Innovation Rebound?

Upward innovation, starting from the underlying infrastructure layer and irrupting into the surrounding layers, is only half the picture in AI. The growing number of AI developers building on the core platform and application layers has supercharged innovation back down in the underlying infrastructure layer. The demand has been so acute that the “compute crunch” has resulted in product delays, inflated street prices for GPUs, and even reports of gaming graphics cards being repurposed as AI GPUs.

The crushing demand for the new technology at the higher layers has driven more innovation at the underlying infrastructure layer. The reigning champion, Nvidia, offers the H100, currently the most powerful GPU, which was already up to 30x faster for LLMs than the previous generation. Given the demand for GPUs, Nvidia recently announced the H200, which will deliver improvements in memory and bandwidth for more complex LLM workloads. As would be expected, AMD has also announced that its MI300A will be in production in 2024, and claims it is four times as fast as the H100. The innovation is not limited to chip design, of course; it is also pushing packaging to its limits. Chip-on-Wafer-on-Substrate (CoWoS) packaging, for example, lets the H100 integrate high-bandwidth memory, but limited CoWoS capacity is also suspected to be one of the reasons for the compute crunch.

It’s not just the usual suspects innovating at the underlying infrastructure layer. Each of the large technology giants has also begun entering the race. Google was ahead of this trend with its TPUs. Microsoft recently announced Maia, its AI chip designed for LLMs; Amazon has announced Trainium2, an AI chip for training LLMs; and Meta’s chip, MTIA, targets inference workloads.

Perhaps most excitingly, it’s not just incumbents like Nvidia and the CSPs innovating here; startups are also responding to the demand for more infrastructure, as we discuss below under Criteria Three and Four.

Criteria Three and Four: Innovation Agents and Media Mix

A key characteristic of the generative AI wave has been how thoroughly both startups and incumbents have embraced the new technology. As would be expected with new technologies, startups have largely led the way in LLM innovation, as exemplified by OpenAI, Anthropic, Inflection and Mistral. However, large tech incumbents, from Google (PaLM 2 and Gemini) to Amazon (Olympus) to Meta (Llama 2), have also launched their own LLMs to compete. In addition, several tech incumbents that are not AI-native have moved exceedingly fast to integrate AI and become AI-first, e.g. Notion, Canva, Replit and Dropbox. Perhaps the clearest sign that a major disruption is under way is that even OG tech founders like Google co-founder Sergey Brin have come back from retirement for one more mission.

As referenced earlier, innovation in AI has spanned both hardware and software. Shortly after launching Bard, Google showed off its Robotic Transformer 2 (RT-2), which builds on large language and vision-language models so that robots can more easily understand instructions. Even Apple is rumoured to be hiring engineers to bring LLMs on device, which requires both hardware and software innovation. Startups are innovating in hardware too, such as Lambda Labs and RunPod in cloud infrastructure, and Cerebras, Graphcore and Groq with processors for AI training and inference. Perhaps one of the biggest indicators is the rise of startups building hardware at the application layer, for example Humane (hu.ma.ne), Rewind AI, and the rumoured AI device from OpenAI, Jony Ive and Masayoshi Son. Finally, as expected, there are software advances in the underlying infrastructure, such as mixture-of-experts models and retrieval-augmented generation (RAG), that promise to improve the latency and reliability of LLMs.

Criterion Five: Foundation for Future Innovations

It’s too early to know whether generative AI will provide the foundation for future innovation waves the way the internet became a super platform. We do know, however, that LLMs are being incorporated into other technologies and are enabling breakthroughs that were previously hard to come by.

One example is in math and science. A Google DeepMind blog post describes the team’s paper in Nature on FunSearch (so called because it searches for functions written in computer code), an evolutionary method that pairs an LLM with an automated evaluator. Using it, the team found new solutions to open mathematical problems. According to the blog:

> This work represents the first time a new discovery has been made for challenging open problems in science or mathematics using LLMs. FunSearch discovered new solutions for the cap set problem, a longstanding open problem in mathematics. In addition, to demonstrate the practical usefulness of FunSearch, we used it to discover more effective algorithms for the “bin-packing” problem, which has ubiquitous applications such as making data centers more efficient.

Given the innovation at the compute layer, with ever more advanced chips, other areas that could be built on top include autonomous vehicles (AVs) and AR/VR. For AVs, the new chips may be better suited to generating realistic 3D models of the real world and synthetic data for testing AVs more safely and efficiently. For AR/VR, generative AI could help deliver higher-resolution graphics and reduced latency to minimise motion sickness.

Scorecard

Based on the above, here’s how I would rate the disruptive potential of the current generative AI wave:

- Innovation at all layers: high
- Innovation rebound: high
- Startups and incumbents: high
- Hardware and software: moderate
- Foundation for future innovation: TBD with potential