The Great Unbundling of Google Search

The unlikely challengers to Google’s home field advantage for informational search and why I’m bullish on Google.

“Gentlemen, there’s only two ways I know of to make money: bundling and unbundling.” -Jim Barksdale, CEO Netscape

Bundling and Unbundling of Search

Google has been in the bundling game for search since its inception. They started with websites, then made it the company’s mission to “organize the world’s information.” This meant adding books, images, videos, maps, and even information about the ocean floor. To fight the natural compartmentalism of search results, Google launched universal search back in 2007 to continue to force the bundle.

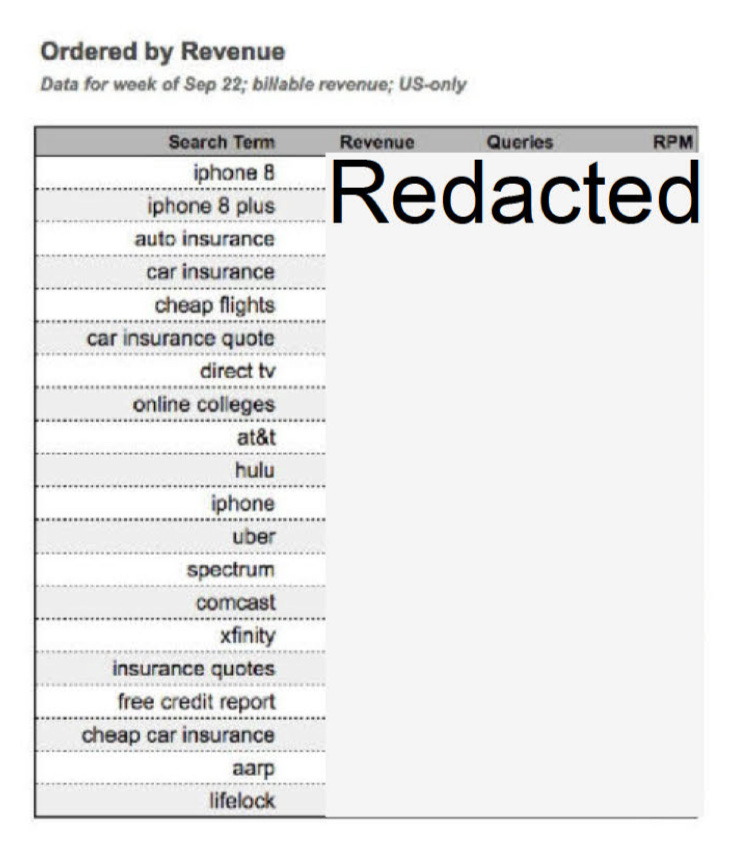

To be sure, various attempts at unbundling Google search have been going on for a long time. As shown in the image below, the most monetizable queries are often for insurance, travel, and some navigation queries (to specific sites). I’m skipping over the “iphone”-related queries on my speculation that they were due to the imminent iPhone launch and are not representative of steady-state queries.

It’s no wonder that the travel vertical has seen a lot of competition, e.g. Kayak, Tripadvisor, Airbnb, and that Booking.com is one of Google’s largest advertisers.

Types of Searches

If vertical search unbundling has been happening for a while, what is different about this moment in time? It helps to break searches into the following categories:

Navigational queries: searches made to retrieve the URL of a specific website for which the user is looking. Examples of navigational searches from the list above include Uber, Comcast, Xfinity, Hulu and AT&T.

Informational queries: searches made to learn about a topic or answer a question. Commonly preceded by “how to” or “what is,” informational queries can be for factual, educational, news, financial, scientific or other content.

Transactional queries: searches made in connection with a purchase or transaction. Examples include “best digital camera,” “hotels in LA,” or “restaurants with dosa.”

As you can imagine, most of the vertical search unbundling has historically been in the third category, transactional queries, because they tend to be the most monetizable. Keywords associated with booking travel, searching for insurance, or purchasing an e-commerce item will have higher advertiser interest.

In the past, very few sources were specifically targeted at stripping away informational queries from Google, but it has happened. Wikipedia and Reddit have become common substitutes for Google, although the searches still very often start on Google. Social search, on the other hand, rarely starts on Google anymore. Web 2.0 began to unbundle social search from Google with the rise of LinkedIn, Facebook, Twitter, Instagram and now TikTok.

Rise of LLMs for Informational Search

LLMs are not an efficient way to conduct navigational searches, and agents are not yet reliable enough to complete transactions on the user’s behalf. However, LLMs are a very effective complement to, and eventually a substitute for, informational searches on Google. For many queries, users just want an answer without having to conduct a secondary search through ten blue links to arrive at it.

Why does the threat to informational search matter if most of the monetizable queries are in the transactional search category? For one, the inability of agents to conduct transactions is a temporary state. Many startups are now working on agents to complete transactions, not to mention the frontier model companies that are incorporating agents in the underlying model itself.

But the real threat to Google is that their decades-long monopoly on informational search blunted the attempts to unbundle transactional searches. That is, if you were already on Google researching different neighborhoods in New York for an upcoming trip, you may as well book your hotel directly on Google. However, if you were doing your New York neighborhood research using ChatGPT, it would require the same amount of effort to navigate to Google as it would directly to Booking.com to book your hotel. In that world, Google must compete alongside other vertical search engines on an even playing field.

Once informational searches begin occurring outside of Google, Google loses home field advantage, and the effect of unbundling transactional searches becomes even more acute. This is further compounded by the fact that Google’s search results page is currently a very effective source of traffic for Google’s own properties such as YouTube, maps, images, shopping, etc.

Have We Seen This Before?

And now, for my favorite question: have we seen this before? In other words, is there any evidence to suggest that unbundling less monetizable information searches from Google leads to fewer transactional searches on Google? Wikipedia and Reddit are not exactly great platforms for commercial transactions, so taking information search share from Google shouldn’t affect monetizable searches.

Except that is exactly what is happening. When social search began unbundling from Google, there was a downstream effect of taking more monetizable searches from Google as well. Google’s SVP Prabhakar Raghavan confirmed that about “40% of young people when they’re looking for a place for lunch, they don’t go to Google Maps or Search, they go to TikTok or Instagram.”

Gen X and millennials might have made “google” a verb, but Instagram and TikTok are now the preferred search engines for Gen Z when seeking local results. According to a new study of 1,002 U.S. consumers, Google is now in third place for 18 to 24-year-olds. Source

Who Benefits?

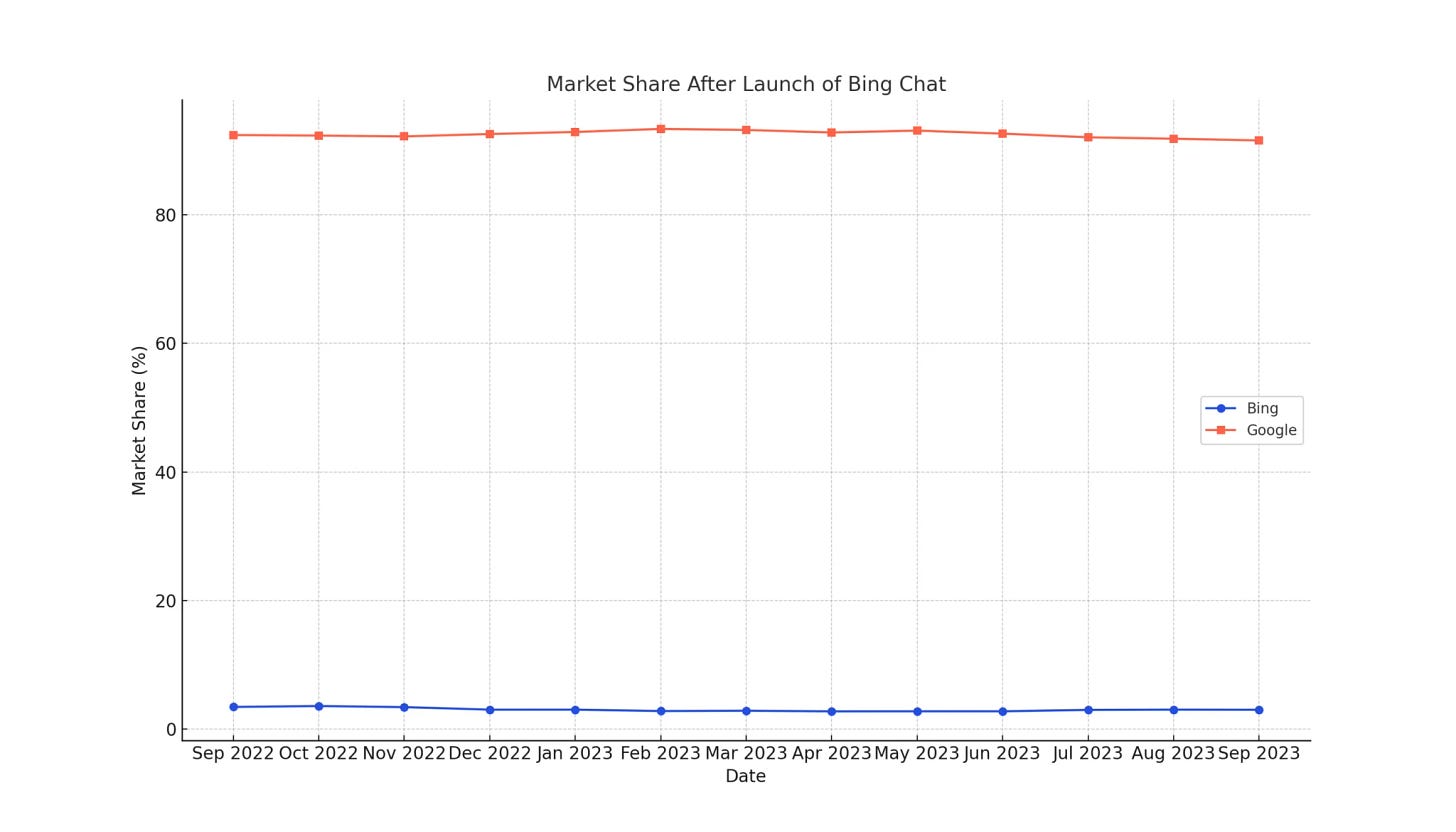

Who benefits from this? The obvious competitor is Microsoft with Bing search. Anecdotally, after Microsoft’s integration of OpenAI’s latest GPT model in search, I did something I’ve never done before: download the Bing app. Apparently I’m not the only one, as Bing saw a 15% surge in daily visits following their GPT integration. However, the spike was not sustained as Bing ended the year with very modest gains in search market share.

For Microsoft, that’s beside the point of course, because after many years of being hassled by Google’s side hustles, Microsoft finally was able to use its own side project to harass Google’s core business. I wrote previously about how Satya could hardly hide his delight and had no qualms about calling Sundar out to dance (to which Sundar recently responded that Google is listening to their own music).

Benefiting more than Microsoft, however, are some unlikely companies that historically have had no business competing in search, namely Meta and, of course, Apple. Let’s start with Meta. They released Llama 3 in April and demonstrated that state-of-the-art open source models can be as performant as GPT-4. Fewer consumers adopt open source over proprietary models, so they also did something potentially more disruptive: Meta integrated Llama 3 into the Meta AI assistant across their properties of Facebook, Messenger, Instagram and WhatsApp. There are over 3 billion people who use one of Meta’s properties on a daily basis (daily average of 3.24 billion as of Q1’24). These users can now remain on Meta’s properties to conduct informational searches with Meta AI instead of going to Google.

Apple is also an unlikely competitor to Google search. To be sure, Apple has attempted to use Spotlight to attract queries, which raised alarm bells at Google. An internal Google presentation in 2014 stated: “We expect these suggestions to siphon queries away from Google in verticals where Spotlight is triggered.” According to the NYT, however, “Apple has not used Spotlight to crib so-called commercial queries — which feature ads in their results — from Google, so the tool has not hurt Google’s search business.” The same report shows that Google paid Apple $18 billion in 2021 to make Google search the default search on Apple devices. To borrow an old Facebook term, the two companies have always had a relationship that is “complicated,” but it’s about to become more fractured.

Just like information search has become embedded across Meta’s properties, that same capability will soon be available across iOS devices. All eyes will be on WWDC24 next month to see whether Apple will integrate a third-party LLM to power information search on iOS. I believe the demos we saw last week from OpenAI and Google I/O were ways to court Apple by showing off each company’s multimodal, multilingual and conversational capabilities in a bid to be the AI assistant for Apple.

Once information search becomes ubiquitous, Google loses its home field advantage to convert information searches into more monetizable transactional searches. Moreover, once agents are reliable enough to complete longer horizon tasks, transaction searches may go away for everyone. Instead, people will be paying for completed tasks (either directly or indirectly), which is exactly what Google did for advertising when they shifted the industry from a CPM to a CPC (or, even more so, a CPA) model.

Why I’m Bullish on Google

It’s not all doom and gloom for Google. Given the above, it might be a surprise for me to say that the company best positioned for the unbundling is Google. But here’s why: the company to benefit most from this post-LLM world will have the following trifecta: (1) wide consumer distribution, (2) capability to develop a state-of-the-art frontier model, and (3) a variety of properties into which these frontier models are embedded.

Apple has the consumer install base and a variety of properties (e.g. iPhone, iPad, iTunes, AirPods, AppleTV), but at least at this stage they would need to rely on a third party for their frontier model. Meta has the frontier model and the consumer base, but their properties are limited to the social media/messaging vertical. Only Google is in the unique position of having an existing consumer install base, a state-of-the-art model, and a variety of properties (Maps, Gmail, Pixel, Docs) into which their AI models can be integrated.

The second reason all hope is not lost for Google is that answers from AI may actually increase the number of queries. That may seem counterintuitive, but we have seen that in the past. It’s well known that Google has been maniacally focused on speed to deliver search results as a competitive advantage. Less known is that increasing the time to deliver search results actually reduces the number of searches people make. Giving users answers faster leads them to ask more questions.

“All other things being equal, more usage, as measured by number of searches, reflects more satisfied users. Our experiments demonstrate that slowing down the search results page by 100 to 400 milliseconds has a measurable impact on the number of searches per user of -0.2% to -0.6% (averaged over four or six weeks depending on the experiment). That's 0.2% to 0.6% fewer searches for changes under half a second!” -Google blogpost Speed Matters
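To put the quoted percentages in concrete terms, here is a minimal sketch. The baseline of one million searches is a hypothetical round number chosen for illustration, not a figure from Google; only the 0.2% and 0.6% drops come from the quote above.

```python
# Illustrative only: the baseline is a made-up round number, not Google data.
HYPOTHETICAL_BASELINE = 1_000_000  # searches made by some cohort of users


def searches_after_slowdown(baseline_searches: int, drop_pct: float) -> int:
    """Searches remaining after a percentage drop caused by added latency."""
    return round(baseline_searches * (1 - drop_pct / 100))


# 0.2% and 0.6% are the ends of the range quoted in the Speed Matters post.
for drop_pct in (0.2, 0.6):
    remaining = searches_after_slowdown(HYPOTHETICAL_BASELINE, drop_pct)
    lost = HYPOTHETICAL_BASELINE - remaining
    print(f"{drop_pct}% drop: {lost:,} fewer searches per {HYPOTHETICAL_BASELINE:,}")
```

The per-user effect looks small, but it compounds across every user and every day, which is why a sub-half-second delay was treated as meaningful.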

It is still too early to know whether we will see the same effect with answers from AI, but the initial data is promising for Google. In a recent interview, Sundar Pichai confirmed that if you put content and links into their AI Overview, they get higher click-through rates than outside AI Overview. If this holds, it contradicts the common wisdom that answers from AI will cannibalize Google search, and we will see Google quickly incorporate LLMs into information search.

Perhaps the most important reason I’m bullish on Google is that they incorporated Gemini answers into Google search results (called AI Overview) before it was perfect. Almost immediately people began dunking on Google for the various gaffes from AI Overview. Below is just one example, and there are some other egregious screenshots in this thread, all unconfirmed as they are screenshots.

Why would I be bullish on Google for releasing answers that should embarrass them? Because the biggest risk to Google is to become a company that has too much to lose, and therefore fails to experiment and innovate. Companies at that scale will usually be on the losing end of the innovator’s dilemma because product launches will be blocked by a myriad of functions. But if I were a competitor to Google, I’d be worried, because releasing AI Overview despite the gaffes means Google shed its inability to launch and learn.

Given estimates of 8.5 billion queries per day, even if Gemini were 99% accurate, the 1% hallucination rate would mean 85 million potential screenshots per day of Google providing comically wrong answers. At Google’s scale, that would certainly result in many gaffes. The flipside, however, is that Google has a scale of potential learning and optimization that no other company has today. So if Google can begin to reduce that number, and their models will only get better from here, watch out.
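The back-of-the-envelope math above can be sketched in a few lines. It assumes, as the paragraph does, that every query gets an AI answer, which makes this an upper bound. The 99.9% and 99.99% accuracy levels are hypothetical additions to show how quickly the number falls as accuracy improves.

```python
# Rough estimate only: 8.5 billion is the daily query estimate cited above.
QUERIES_PER_DAY = 8_500_000_000


def wrong_answers_per_day(queries_per_day: int, accuracy_pct: float) -> int:
    """Expected wrong answers per day if every query received an AI answer."""
    error_rate = (100 - accuracy_pct) / 100
    return round(queries_per_day * error_rate)


# 99% is the figure from the text; the higher accuracy levels are hypothetical.
for accuracy_pct in (99, 99.9, 99.99):
    wrong = wrong_answers_per_day(QUERIES_PER_DAY, accuracy_pct)
    print(f"{accuracy_pct}% accurate: about {wrong:,} wrong answers per day")
```

Each additional "nine" of accuracy cuts the pool of potential screenshots by a factor of ten, which is the optimization Google's scale lets it pursue.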